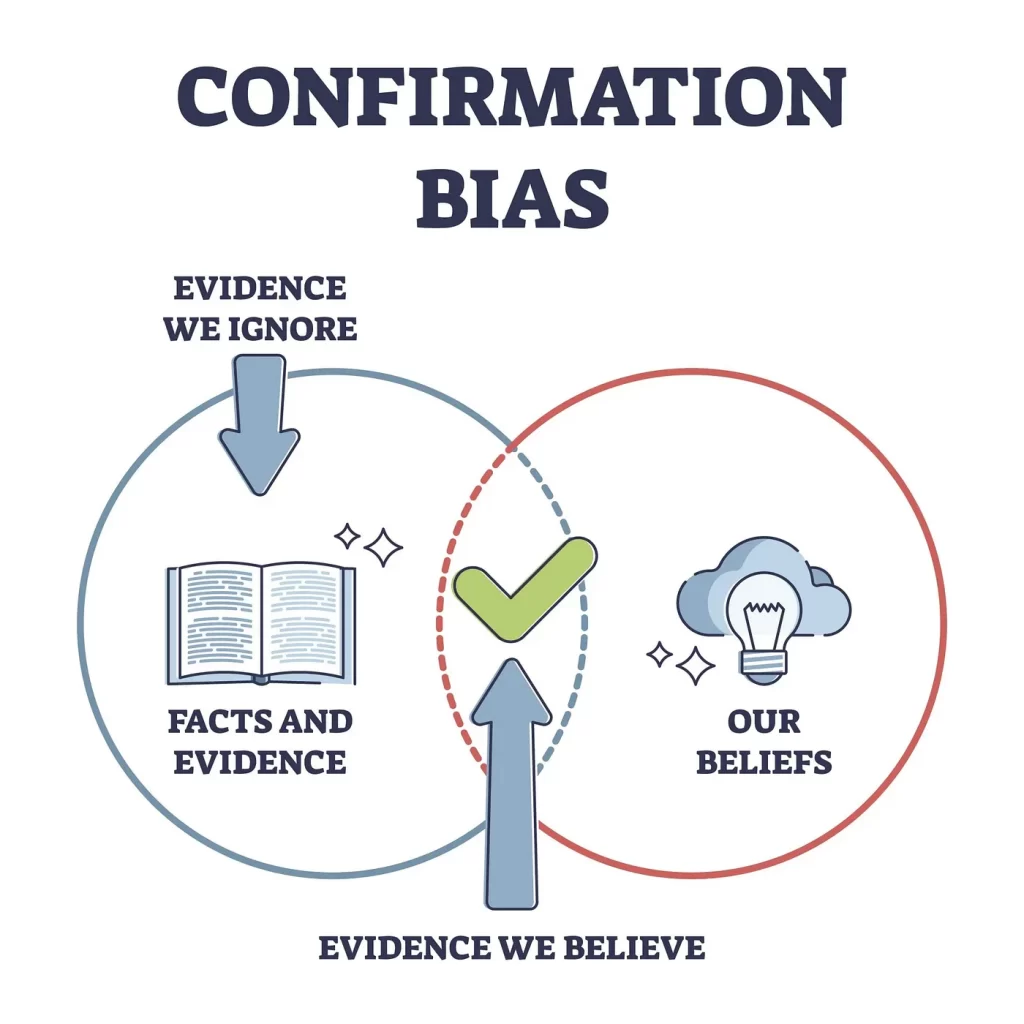

The Internet is an immense reservoir of knowledge, and most people turn to it first when seeking information. It offers a wealth of resources, including articles, blog posts, expert insights, surveys, and academic papers, covering nearly every conceivable topic. However, this abundance carries the risk of confirmation bias, where individuals reinforce their existing beliefs by consuming only information that agrees with them. The danger is particularly prevalent in subjects like health and diet, where one can easily find articles supporting or refuting almost any claim.

Confirmation bias

Image source: https://www.simplypsychology.org/confirmation-bias.html

Bias, in various forms, has been ingrained in our culture and history since the earliest days of mankind. With the advent of the internet, even search engine algorithms exhibit biases. Search engines tailor their results to your preferences, using factors such as your likes, dislikes, and ideological standpoint, without making you aware of them. To paraphrase Warren Buffett, what human beings excel at is interpreting new information to reaffirm their prior convictions.

This article describes my reflections on one of the major challenges of the internet era where there is no control over the information available to everyone. The ease of information availability makes people more biased and isolated by their ideologies.

Why Confirmation Bias Poses a Challenge:

Challenging one’s own thought processes or beliefs can be an uncomfortable endeavor for individuals. It is deeply ingrained in human nature to seek validation and prove oneself right at every turn. In the age of the internet, a vast sea of information is readily accessible to support and reinforce personal beliefs, often with far-reaching consequences for society. This phenomenon has had a profound and, at times, catastrophic impact on our collective well-being.

In today’s world, whether an ideology benefits society or not, those who subscribe to it are constantly bombarded with a barrage of materials such as news, articles, posts, speeches, and more via the internet and social media. This unrelenting reinforcement of their beliefs has given rise to the alarming trend of radicalizing individuals for political or religious purposes, all the more prevalent due to the ubiquitous presence of the internet and social media on mobile devices. As a result, individuals are more inclined to embrace fake news if it aligns with their preconceived ideologies, often at the expense of accepting verifiable, objective information.

Consider, for instance, the notion of a flat Earth, which lacks scientific substantiation. Despite the absence of empirical evidence, there exists a multitude of articles, videos, and posts that lend credence to this theory, along with a dedicated following who proudly identify themselves as “The Flat Earth Society.”

Similarly, a group of individuals denies the existence of issues like “global warming” or “climate change”. They think these ideas are made up to control various aspects of how we live. Their convictions grow stronger as they are continually exposed to content on the internet that validates their belief system. The sheer ease of accessing such information further isolates individuals, making it increasingly simple to manipulate and radicalize people on the basis of scientifically unproven claims. Paradoxically, the internet, often regarded as a boundless source of knowledge, can in practice foster ignorance rather than enlightenment.

Note: I am not a whistle-blower in this matter by the way😉

Case Study 1:

A paper by R. DuBroff, published in Nov 2017, highlights how expert opinion can also be shaped by confirmation bias and therefore cannot be trusted unconditionally. The paper illustrates 6 examples of confirmation bias that appear to distort the evidence to substantiate the opinions and recommendations of experts. This sounds scary, doesn’t it? The experts in these cases collected only the data points that supported their theory and ignored the rest. The chances of encountering such unreliable information, recommendations, and opinions are increasingly high, and confirmation bias is one of the main reasons.

Case Study 2:

This study is more recent. Published in Nov 2021, it illustrates how confirmation bias on social media induced polarization during COVID-19. It examines how manifestations of confirmation bias contributed to the development of echo chambers in supply chain information sharing during the pandemic. While social media played a big role in helping those in need, one cannot deny its role in polarizing views on the COVID-19 pandemic.

Here I quote, as-is, the abstract of the published study.

Social media has played a pivotal role in polarising views on politics, climate change, and more recently, the Covid-19 pandemic. Social media induced polarisation (SMIP) poses serious challenges to society as it could enable ‘digital wildfires’ that can wreak havoc worldwide. While the effects of SMIP have been extensively studied, there is limited understanding of the interplay between two key components of this phenomenon: confirmation bias (reinforcing one’s attitudes and beliefs) and echo chambers (i.e., hear their own voice). This paper addresses this knowledge deficit by exploring how manifestations of confirmation bias contributed to the development of ‘echo chambers’ at the height of the Covid-19 pandemic. Thematic analysis of data collected from 35 participants involved in supply chain information processing forms the basis of a conceptual model of SMIP and four key cross-cutting propositions emerging from the data that have implications for research and practice.

My observation:

During the COVID-19 pandemic, a great deal of information was being shared about the coronavirus, its origin, vaccines, and so on. I could broadly divide the people around me into two groups:

- The first believed the coronavirus pandemic was a political stunt staged across the world by powerful political leaders and corporations.

- The second was too scared and considered it the end of the world.

During the pandemic, enough content was produced on the internet and social media to support the beliefs of both groups. One could form an opinion and reinforce it in whichever group one wanted to belong to. But the “truth”, perhaps, lay somewhere between these two beliefs, or far from both. During 2020–21, the pandemic caused a huge loss of human life and severe damage to the economy. A great deal of that loss was due to misinformation shared across platforms and tools such as Facebook, Twitter, and wikis. Because of the misinformation about the virus, vaccinations, and precautionary measures, a huge effort had to be spent convincing people to follow the recommended measures.

Discussion

In this article, I have mostly discussed the challenges that arise from confirmation bias. Its impact is much greater than what I have outlined here. I do not have a perfect solution to eradicate confirmation bias from the internet. Before thinking about solutions, I would like to categorize this bias by the level at which it is induced and consider how it could “possibly” be reduced using Knowledge Management tools such as the Semantic Web and Linked Open Data knowledge repositories. Although removing confirmation bias completely is close to impossible, the following are a few approaches that can be tried to reduce the confirmation-biased information available on the internet.

Possible Solutions

1. Search Engine Algorithms and Linked Open Data

The very first category is “serving content to the users based on their likes and dislikes”. At first, this may sound like a great feature, but it is not always one.

An example: you like or admire a political ideology called “X”. You agree with a few of its followers’ past actions and hence lean towards this ideology. Search engines, social media platforms, and similar tools start serving you content that directly or indirectly supports the same ideology. Eventually, this conditions you to become a believer, simply because you were exposed only to content in favor of “X”. You did not get a chance to evaluate it against other ideologies because they were not automatically served to you. Sometimes, even worse, search engines start serving you negative content about other ideologies, which makes you believe even more strongly in your favorite. Although at first you had only a slight inclination towards ideology “X”, you are now completely biased because of the content served to you by computers.

As Baeza-Yates (2018) puts it, “Any remedy for bias starts with awareness of its existence”. In my opinion, developers must be trained and made aware of such biases and their consequences. Awareness of “confirmation bias” and its impact would be the first step towards finding a solution. The algorithms of search engines, social media platforms, and similar tools should not apply implicit filters based on users’ past likes or dislikes before serving content. Otherwise, users can be unknowingly trapped in a confirmation-biased view and isolate themselves within a single ideology.
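To make the effect described above concrete, here is a minimal, purely illustrative Python sketch. It is not how any real search engine works: the `Article` class, the `stance` labels, the `boost` value, and the relevance numbers are all hypothetical. It only shows how a small history-based boost can reorder results so that agreeable content comes first, and how leaving that implicit filter off keeps the ranking independent of the user.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Article:
    title: str
    stance: str       # hypothetical label such as "pro-X", "anti-X", "neutral"
    relevance: float  # relevance to the query in [0, 1], independent of the user


def rank(articles, liked_stances=frozenset(), boost=0.5):
    """Sort by relevance, adding `boost` to stances the user liked before.

    With an empty `liked_stances` no implicit filter is applied.
    """
    def score(article):
        bonus = boost if article.stance in liked_stances else 0.0
        return article.relevance + bonus

    return sorted(articles, key=score, reverse=True)


# Made-up example data for the ideology "X" from the text.
articles = [
    Article("Critique of X", "anti-X", 0.9),
    Article("X explained neutrally", "neutral", 0.8),
    Article("Why X is right", "pro-X", 0.6),
    Article("X wins again", "pro-X", 0.5),
]

neutral = rank(articles)                  # same order for every user
personalized = rank(articles, {"pro-X"})  # boosted by past likes

print([a.title for a in neutral])
print([a.title for a in personalized])
```

In the personalized ranking, the two least relevant articles move to the top only because they match the user’s earlier likes, which is the conditioning loop the example about ideology “X” describes.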

As Heath and Bizer wrote in 2011, “Just as the World Wide Web has revolutionized the way we connect and consume documents, so can it revolutionize the way we discover, access, integrate, and use data”. Linked Data applies to data the same linking principle that the Web uses for documents, so it can be crawled and indexed much as search engines index web pages. Search engines can use Linked Open Data to surface information and facts about a topic shared by experts and publishers. Such results can help reduce confirmation bias because they are not influenced by the user’s behavior; rather, they are based on expert opinion.
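As a rough sketch of the idea, Linked Data describes facts as subject, predicate, object triples identified by URIs. The Python below models that with plain tuples rather than a real RDF library. Every URI under `http://example.org/`, the predicate names, and the publishers are made up for illustration. The point is only that a lookup over such data returns the same answer for every user, because no browsing history enters the query.

```python
# Hypothetical namespace; none of these URIs refer to real resources.
EX = "http://example.org/"

# Each fact is a (subject, predicate, object) triple.
triples = {
    (EX + "claim/flat-earth", EX + "assessedBy", EX + "org/space-agency"),
    (EX + "claim/flat-earth", EX + "verdict", "refuted"),
    (EX + "claim/climate-change", EX + "assessedBy", EX + "org/climate-panel"),
    (EX + "claim/climate-change", EX + "verdict", "supported"),
}


def match(s=None, p=None, o=None):
    """Return all triples matching the pattern; None acts as a wildcard."""
    return sorted(
        t for t in triples
        if (s is None or t[0] == s)
        and (p is None or t[1] == p)
        and (o is None or t[2] == o)
    )


def facts_about(subject):
    """Collect predicate -> object pairs published about one subject."""
    return {p: o for _, p, o in match(s=subject)}


# The result depends only on the published data, not on who is asking.
print(facts_about(EX + "claim/flat-earth"))
```

A production system would use real vocabularies and a query language such as SPARQL; the tuple-based store here is only a stand-in to show the shape of the data.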

2. Use of Semantic Web and Artificial Intelligence

Knowledge representation using Semantic Web technology can help organize the information available on the internet. Organized knowledge representation can help “Artificial Intelligence” perform better and provide users with information that is “fact”, or at least closer to “fact”. Whether or not a piece of information is “fact” can be judged on multiple factors, such as the publisher of the content and the organization that published it. Fact-checking processes built on the Semantic Web and Artificial Intelligence can then be implemented.
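One way to picture such a categorization is a simple scoring rule, sketched below in Python. This is an assumption-laden toy, not an established fact-checking method: the `PUBLISHER_TRUST` table, the weights, the `threshold`, and the extra `independent_sources` factor are all hypothetical and would need to be derived and audited in any real system.

```python
from dataclasses import dataclass

# Hypothetical trust values in [0, 1] per publisher type.
PUBLISHER_TRUST = {
    "peer-reviewed journal": 0.9,
    "news outlet": 0.6,
    "anonymous blog": 0.2,
}


@dataclass
class Content:
    claim: str
    publisher_type: str
    independent_sources: int  # assumed count of unrelated sources that agree


def label(content, threshold=0.7):
    """Combine publisher trust and corroboration into a coarse label."""
    trust = PUBLISHER_TRUST.get(content.publisher_type, 0.0)
    # Cap corroboration at 5 sources so it stays in [0, 1].
    corroboration = min(content.independent_sources, 5) / 5
    score = 0.6 * trust + 0.4 * corroboration
    return "likely fact" if score >= threshold else "needs verification"


print(label(Content("claim A", "peer-reviewed journal", 4)))
print(label(Content("claim B", "anonymous blog", 1)))
```

Note that a rule like this inherits whatever bias sits in its trust table, so it reduces confirmation bias only to the extent that those values are themselves chosen transparently.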

I will be delighted to hear your opinion about this challenge, its consequences, and its solutions. Again, the whole idea of this article is “NOT TO BE CONFIRMATION BIASED” while engaging with any kind of research, articles, etc. Therefore, different thoughts and ideas are more welcome than simply seconding my opinion on this topic 😂

References

- Baeza-Yates 2018. Bias on the Web. Communications of the ACM, 61(6).

- Modgil, Sachin et al. A Confirmation Bias View on Social Media Induced Polarisation During Covid-19. Information Systems Frontiers (2021): 1–25.

- Meppelink, Corine S. et al. “‘I was Right about Vaccination’: Confirmation Bias and Health Literacy in Online Health Information Seeking.” Journal of Health Communication 24 (2019): 129–140.

- DuBroff, Robert. “Confirmation bias, conflicts of interest and cholesterol guidance: can we trust expert opinions?” QJM: Monthly Journal of the Association of Physicians 111(10) (2018): 687–689.

- Berners-Lee, Hendler, and Lassila. 2001. The Semantic Web. Scientific American, 284(5).

- Heath and Bizer. 2011. Chapter 1 of Linked Data: Evolving the Web into a Global Data Space. Synthesis Lectures on the Semantic Web: Theory and Technology, 1:1, 1–136. Morgan & Claypool.
